Client code:

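from langchain_core.messages import AIMessage, HumanMessage
from langserve import RemoteRunnable

# Earlier turns of the conversation, passed to the agent as context
chat_history = [HumanMessage(content="Hello"), AIMessage(content="Hi")]

# Client for the /agent route exposed by the server
remote_chain = RemoteRunnable("http://127.0.0.1:8000/agent/")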

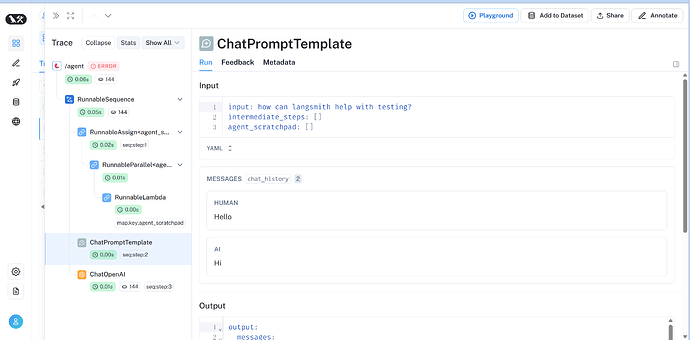

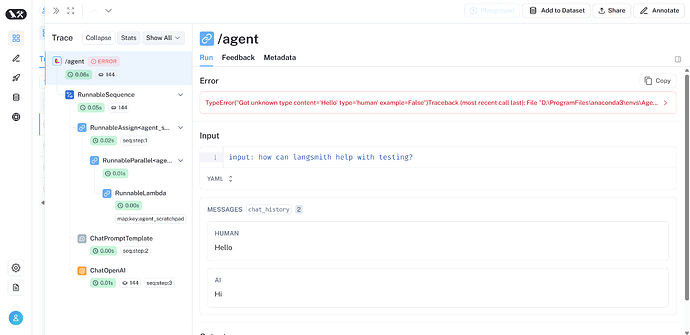

remote_chain.invoke({
    "input": "how can langsmith help with testing?",
    "chat_history": chat_history,
})
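To see roughly what `RemoteRunnable.invoke` sends over the wire, the call above can be approximated with the standard library alone. This is a minimal sketch, assuming the LangServe route accepts a JSON body with `input`, `config`, and `kwargs` keys at `/agent/invoke`, and that chat messages serialize as dicts with `type` and `content` fields. The helper names `message` and `build_invoke_request` are hypothetical and not part of LangServe. The request is only built here, not sent.

```python
import json
from typing import List
from urllib.request import Request

BASE_URL = "http://127.0.0.1:8000/agent"  # same address the client uses


def message(role: str, content: str) -> dict:
    """Serialize one chat message as a plain dict (assumed wire format)."""
    return {"type": role, "content": content}


def build_invoke_request(question: str, history: List[dict]) -> Request:
    """Build (but do not send) a POST request for the assumed /invoke endpoint."""
    body = {
        "input": {"input": question, "chat_history": history},
        "config": {},
        "kwargs": {},
    }
    return Request(
        url=f"{BASE_URL}/invoke",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


history = [message("human", "Hello"), message("ai", "Hi")]
request = build_invoke_request("how can langsmith help with testing?", history)
print(request.get_method(), request.full_url)
```

Passing `request` to `urllib.request.urlopen` would send it once the server is running. The response shape is left out because it depends on the LangServe version in use.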

Server code:

from typing import List

from fastapi import FastAPI
from langchain import hub
from langchain.agents import AgentExecutor, create_openai_functions_agent
from langchain.pydantic_v1 import BaseModel, Field
from langchain.tools.retriever import create_retriever_tool
from langchain_community.document_loaders import WebBaseLoader
from langchain_community.tools.tavily_search import TavilySearchResults
from langchain_community.vectorstores import FAISS
from langchain_core.messages import BaseMessage
from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI, OpenAIEmbeddings
from langchain_text_splitters import RecursiveCharacterTextSplitter
from langserve import add_routes

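# -1. Configure LangSmith
import os
from uuid import uuid4

unique_id = uuid4().hex[0:8]
# Tracing also requires LANGCHAIN_TRACING_V2="true" and LANGCHAIN_API_KEY to be set (for example in .env)
os.environ["LANGCHAIN_PROJECT"] = f"Tracing Walkthrough - {unique_id}"
os.environ["LANGCHAIN_ENDPOINT"] = "https://api.smith.langchain.com"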

# 0. Load API keys
from dotenv import load_dotenv

load_dotenv()
openai_api_key = os.getenv("openai_api_key")
tavily_api_key = os.getenv("tavily_api_key")
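`os.getenv` returns `None` when a variable is missing, so a typo in `.env` would only surface later, when the OpenAI or Tavily client is first used. One possible way to fail early is sketched below, assuming the same variable names as the script. The helper `require_env` is hypothetical and not part of python-dotenv, and the placeholder value exists only so the sketch runs on its own.

```python
import os


def require_env(name: str) -> str:
    """Return the value of an environment variable or raise a clear error."""
    value = os.getenv(name)
    if not value:
        raise RuntimeError(f"Missing required environment variable: {name}")
    return value


# Placeholder so this sketch runs standalone; a real setup would rely on load_dotenv()
os.environ.setdefault("openai_api_key", "sk-placeholder")
openai_api_key = require_env("openai_api_key")
```

Calling `require_env` right after `load_dotenv()` keeps the failure close to its cause instead of inside the first agent call.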

# 1. Load Retriever
# Fetch the LangSmith user guide, split it into chunks, and index the chunks in FAISS
loader = WebBaseLoader("https://docs.smith.langchain.com/user_guide")
docs = loader.load()
text_splitter = RecursiveCharacterTextSplitter()
documents = text_splitter.split_documents(docs)
embeddings = OpenAIEmbeddings()
vector = FAISS.from_documents(documents, embeddings)
retriever = vector.as_retriever()

# 2. Create Tools

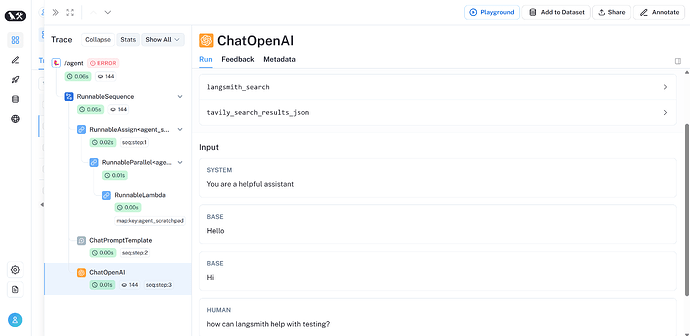

retriever_tool = create_retriever_tool(
    retriever,
    "langsmith_search",
    "Search for information about LangSmith. For any questions about LangSmith, you must use this tool!",
)
search = TavilySearchResults(tavily_api_key=tavily_api_key)
tools = [retriever_tool, search]

# 3. Create Agent
prompt = hub.pull("hwchase17/openai-functions-agent")
llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0, openai_api_key=openai_api_key)
agent = create_openai_functions_agent(llm, tools, prompt)
agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

# 4. App definition
app = FastAPI(
    title="LangChain Server",
    version="1.0",
    description="A simple API server using LangChain's Runnable interfaces",
)

# 5. Adding chain route
class Input(BaseModel):
    input: str
    # chat_history: List[BaseMessage] = []
    chat_history: List[BaseMessage] = Field(
        ...,
        extra={"widget": {"type": "chat", "input": "location"}},
    )


class Output(BaseModel):
    output: str

# Expose the bare LLM at /try
add_routes(
    app,
    llm,
    path="/try",
)

# Expose the agent at /agent with explicit input and output schemas
add_routes(
    app,
    agent_executor.with_types(input_type=Input, output_type=Output),
    path="/agent",
)

if __name__ == "__main__":
    import uvicorn

    uvicorn.run(app, host="localhost", port=8000)

# print(agent_executor.with_types(input_type=Input, output_type=Output).with_config(config).input_schema)